Indexing a site means that a search engine has discovered its pages and stored them in its database, the index (for Google, the Google index). A site that is not indexed will not appear in Google’s results for any search query.

In 2024, search engines still struggle to index some sites, typically those that do not meet their quality standards or whose content is hard for crawlers (such as Googlebot, Google’s crawler) to read and interpret. That is why it is crucial to make your content “indexable”: accessible to these engines and interpretable by them.

Getting all of a website’s important pages indexed is therefore the first and most important KPI in SEO.

It is important to say right from the start that being indexed does not mean ranking at the top; it only means being present in the results. Ranking well requires further SEO work.

How do you check if a site is indexable?

To see how many pages of your website Google has indexed, search Google with the site: operator, for example site:seolitte.com. You can narrow the list by combining it with other operators: site:seolitte.com inurl:seo shows only the indexed pages whose URL contains the word “seo”. The reported number fluctuates and is only an estimate, but it is a good quick way to gauge Google’s index for a particular site.
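If you run these checks often, a tiny helper can build the search URL for you to open in a browser. This is a minimal Python sketch; the function name google_site_query_url is hypothetical, and it only builds the URL (it does not fetch or parse any results).

```python
from urllib.parse import urlencode


def google_site_query_url(domain: str, *extra_operators: str) -> str:
    """Build a Google search URL for a site: query, optionally narrowed
    by further operators such as inurl:seo (hypothetical helper)."""
    query = " ".join([f"site:{domain}", *extra_operators])
    # urlencode percent-encodes ":" as %3A and turns spaces into "+"
    return "https://www.google.com/search?" + urlencode({"q": query})


print(google_site_query_url("seolitte.com", "inurl:seo"))
# https://www.google.com/search?q=site%3Aseolitte.com+inurl%3Aseo
```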

Google’s cache (the cache: operator and the “Cached” links in results) used to show how Google had stored a page, revealing which elements of your content were indexable and which ones you should remove to make the page easier for search engines to understand. Google retired this feature in 2024; the URL Inspection tool in Google Search Console now shows how Googlebot sees a given URL.

In the Screaming Frog SEO Spider, the “Non-Indexable URLs in Sitemap” filter lists the URLs in your sitemap that search engines cannot index. This tells you where to start improving the site.

Reasons for partial and/or erroneous indexing

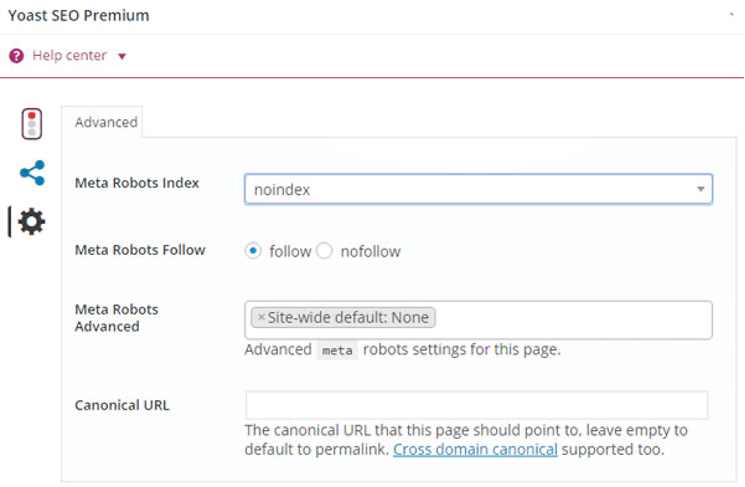

Sometimes the cause is very simple. For example, the developers forgot to allow the site to be indexed and left a “noindex” directive in place (such as <meta name="robots" content="noindex">), which tells crawlers not to include the page in their indexes.
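A stray noindex is easy to check for automatically. The Python sketch below, using only the standard library, looks for noindex (or none, which implies noindex) in <meta name="robots"> and <meta name="googlebot"> tags and, optionally, in an X-Robots-Tag response header value you pass in. The names RobotsMetaParser and has_noindex are hypothetical, and the sketch simplifies header handling by ignoring which crawler a header directive names.

```python
from html.parser import HTMLParser
from typing import Optional


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not values
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            content = attr.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str, x_robots_tag: Optional[str] = None) -> bool:
    """Return True if meta robots tags or the X-Robots-Tag header value
    contain "noindex" or "none" (which implies noindex)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives = list(parser.directives)
    if x_robots_tag:
        # Header values may name a crawler, e.g. "googlebot: noindex";
        # this sketch keeps only the directive after the last ":".
        for part in x_robots_tag.split(","):
            directives.append(part.split(":")[-1].strip().lower())
    return "noindex" in directives or "none" in directives


print(has_noindex('<meta name="robots" content="noindex, follow">'))  # True
```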

A noindex directive keeps a page out of the index, while a Disallow rule in robots.txt stops crawlers from fetching a page at all. Indexing problems can also come from misconfigured robots.txt or meta robots tags, hosting or uptime problems, DNS errors, or a range of other technical defects.
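To see which of your URLs a robots.txt file blocks, Python’s standard urllib.robotparser module can evaluate the rules offline. This is a minimal sketch; blocked_urls and the sample rules are hypothetical. Note that urllib.robotparser uses simple prefix matching and does not implement Google’s * and $ path wildcards, so for such rules its answer may differ from Googlebot’s.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list, user_agent: str = "Googlebot") -> list:
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks an admin area for all crawlers
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

print(blocked_urls(ROBOTS_TXT, ["https://example.com/", "https://example.com/admin/login"]))
# ['https://example.com/admin/login']
```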

Having an XML sitemap

A sitemap helps search engines discover and index all of a website’s pages, which is important for improving online visibility.

For various reasons, search engines cannot always find and index every URL on a site. A sitemap lists the pages you want Google’s bots to crawl and index, that is, to add to Google’s database. It is a strong hint rather than a guarantee that those pages will be indexed.
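Sitemaps follow the format defined at sitemaps.org: a urlset element containing one url entry per page, each with a loc and an optional lastmod. The Python sketch below generates one with the standard library; build_sitemap and the example URLs are hypothetical, and a real site would feed it URLs from its CMS or database.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Optional, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, Optional[str]]]) -> str:
    """Build sitemap XML from (loc, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes "&" and "<"
        if lastmod:
            # W3C date format, e.g. 2024-01-15
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog", None),
]))
```

The resulting file is usually served at the site root (for example /sitemap.xml) and submitted in Google Search Console.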

Duplicate content

Google’s Panda algorithm update, which demotes low-quality and duplicate content, was released back in 2011. It filters low-quality sites out of the top results so that the most relevant pages for a query stand out. According to Google, just a few pages with poor-quality or duplicate content can affect the rankings of the entire site, so Google recommends deleting such pages or blocking them from being indexed.
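A first pass at finding duplicate pages on your own site is to fingerprint each page’s text and group pages whose fingerprints match. This Python sketch assumes you already have the main text of each page (the pages dictionary is hypothetical); it normalizes case and whitespace, then hashes with SHA-256, so it only catches exact duplicates. Near-duplicates need fuzzier techniques such as shingling.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    # Lowercase and collapse whitespace so trivial formatting
    # differences do not hide an exact duplicate.
    normalized = re.sub(r"\s+", " ", text.lower()).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict) -> list:
    """Group URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {"/a": "Red shoes  on sale", "/b": "red shoes on sale", "/c": "Blue hats"}
print(find_exact_duplicates(pages))  # [['/a', '/b']]
```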

Automatically generated content

Google is not keen on automatically generated content either. Auto-generated content is text produced automatically, often at random, that means nothing to users but contains keywords. Google does not appreciate it and can penalize or de-index sites that rely on it.

Google prefers original, good-quality content. If one page’s information matches another page’s, Google treats it as duplicate content, which can hurt your search rankings. Pages with such content also risk not being indexed at all (that is, not stored in Google’s database).

So if the site has many errors, duplicate or irrelevant content, or a Google penalty, things get very complicated. Also keep in mind that a new site is less visible to search engines for its first few months (often around six): the authority and reputation of a website are only built over time.

You don’t have enough backlinks

To get properly indexed by Google, you also need to actively build backlinks. The basic options are business directories (where you can add information about your business and website), social networks, whose popularity can help you gather authoritative backlinks, and, last but not least, collaborations with the media.

You don’t practice internal linking

Internal linking matters because without a proper internal structure, website visitors will be as confused as Google. The rule sounds quite simple: to be easily “understood” and indexed by Google, the internal link structure must be simple and convenient for visitors to navigate. Each page should also link to (give a vote of trust to) other pages with similar content, that is, complementary pages or pages of maximum importance for the whole website. Including links to relevant pages, and to similar products, makes any site easier and more pleasant to navigate.
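One way to audit internal linking is to treat the site as a graph: each page maps to the internal pages it links to. A breadth-first search from the home page gives each page’s click depth, and pages the search never reaches are “orphans” that neither visitors nor crawlers can find through links. This Python sketch assumes a hypothetical links dictionary, for example built from a crawler’s export, and a hypothetical list of all known pages.

```python
from collections import deque


def crawl_depths(links: dict, start: str) -> dict:
    """Breadth-first search: minimum number of clicks from start to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links: dict, start: str, all_pages: list) -> list:
    """Pages that exist but cannot be reached by following internal links."""
    return sorted(set(all_pages) - set(crawl_depths(links, start)))


links = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/seo-tips"],
    "/shop": ["/shop/shoes"],
    "/shop/shoes": ["/shop"],
}
all_pages = ["/", "/blog", "/shop", "/blog/seo-tips", "/shop/shoes", "/old-landing"]
print(orphan_pages(links, "/", all_pages))  # ['/old-landing']
```

Pages with a large click depth, or none at all, are good candidates for new links from related, important pages.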

Indexing a site in search engines: what to pay close attention to

Optimizing for mobile devices

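Sites that are not mobile-friendly are at a disadvantage in Google. Why? Google uses mobile-first indexing: it crawls and indexes sites primarily with its smartphone crawler and relies on the mobile version of their content. Content missing from the mobile version may not be indexed, and a site that cannot be accessed on mobile devices at all risks not being indexed.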

Because Google prioritizes the mobile versions of sites, excellent mobile loading speed, responsiveness, and adaptability are basic requirements. If a page loads slowly, users will abandon it, and a high bounce rate or little time spent on the page are signs that the site is not meeting its visitors’ needs.
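A quick first check of responsiveness is whether a page declares a viewport meta tag, which responsive layouts normally include. This is only a rough heuristic, not Google’s own mobile-friendliness test: a page can have the tag and still render badly on phones. The Python sketch below uses the standard library’s HTML parser; ViewportFinder and viewport_content are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag == "meta" and self.viewport is None:
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "viewport":
                self.viewport = attr.get("content") or ""


def viewport_content(html: str) -> Optional[str]:
    """Return the viewport meta content, or None if the page has no such tag."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    return finder.viewport


page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(viewport_content(page))  # width=device-width, initial-scale=1
```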

A mobile-friendly site adds value for users. If you run an online store, consider creating a mobile app and publishing informative articles. Whoever invests in a pleasant user experience has a lot to gain in the long run, especially if they also invest in promoting the site in search engines.

Use of Google Analytics and Search Console tools

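These two tools do not index your pages themselves, and using them does not directly influence Google’s ranking algorithm. What they do is show how your pages perform and reveal the problems that keep them from being indexed, so you can fix them.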

In addition to showing total clicks, impressions, click-through rate (CTR), and the site’s average position in search results, Google Search Console identifies the errors the site is facing, including those that prevent it from being indexed properly. It also provides guidance on solving the problems it identifies.
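Once you know which URLs are indexed, the indexing KPI can be expressed as a coverage ratio: the share of the URLs you want indexed (for example, those in your sitemap) that actually are. This Python sketch assumes you already have both lists, for instance from your sitemap and from an export of indexed pages; how complete such an export is depends on the tool, and index_coverage is a hypothetical name.

```python
def index_coverage(sitemap_urls: list, indexed_urls: list) -> tuple:
    """Return (share of sitemap URLs that are indexed, sorted list of missing URLs)."""
    wanted = set(sitemap_urls)
    indexed = set(indexed_urls) & wanted  # ignore indexed URLs not in the sitemap
    ratio = len(indexed) / len(wanted) if wanted else 0.0
    return ratio, sorted(wanted - indexed)


ratio, missing = index_coverage(["/", "/a", "/b", "/c"], ["/", "/a", "/b", "/old"])
print(ratio, missing)  # 0.75 ['/c']
```

The missing list is the natural starting point for the checks described earlier: noindex directives, robots.txt rules, duplicate content, and internal links.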

Conclusions

Knowing how many pages receive traffic from organic search results is critical to monitoring overall SEO performance. This number also tells us about indexing, that is, how many of the site’s pages the engines keep in their indexes. For most large sites (50,000+ pages), simply getting pages indexed is critical to gaining traffic. The metric provides quantifiable evidence of SEO success or failure: as you work on site architecture, backlink acquisition, XML sitemaps, content uniqueness, and metadata, your traffic should increase, showing that more and more pages are earning positions in search engine results.